Monday, April 19, 2010

New Stuff

Sorry that I haven't updated in quite some time...just not on my major priority list of things to do. (and the pay just isn't what it used to be...)

I am looking at perhaps a new way of doing things, so if you can bear with me for a short time, I will do my best to get some new stuff up here.

Thanks

Thursday, January 22, 2009

General Commands for Navisphere CLI

Physical Container-Front End Ports Speeds

naviseccli -h 10.124.23.128 port -list -sfpstate

naviseccli -h 10.124.23.128 -set sp a -portid 0 2

naviseccli -h 10.124.23.128 backendbus -get -speeds 0

SP Reboot and Shutdown GUI

naviseccli -h 10.124.23.128 rebootsp

naviseccli -h 10.124.23.128 resetandhold

Disk Summary

naviseccli -h 10.124.23.128 getdisk

naviseccli -h 10.124.23.128 getdisk 0_0_9 (Bus_Enclosure_Disk - specific disk)
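The Bus_Enclosure_Disk notation is easy to take apart in a script. Here is a minimal Python sketch; the names `DiskId` and `parse_disk_id` are made up for illustration and are not part of Navisphere.

```python
from typing import NamedTuple


class DiskId(NamedTuple):
    """One disk position, as written in Bus_Enclosure_Disk form."""
    bus: int
    enclosure: int
    disk: int


def parse_disk_id(text: str) -> DiskId:
    """Split a string such as '0_0_9' into bus, enclosure and disk numbers."""
    parts = text.split("_")
    if len(parts) != 3:
        raise ValueError(f"expected Bus_Enclosure_Disk, got {text!r}")
    bus, enclosure, disk = (int(p) for p in parts)
    return DiskId(bus, enclosure, disk)


# The disk from the getdisk example: bus 0, enclosure 0, disk 9.
print(parse_disk_id("0_0_9"))
```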

Storage System Properties- Cache Tab

naviseccli -h 10.124.23.128 getcache

naviseccli -h 10.124.23.128 setcache -wc 0 -rca 0 -rcb 0 (to disable Write and Read Cache)

naviseccli -h 10.124.23.128 setcache -p 4 -l 50 -h 70 (Set Page Size to 4 KB, Low WaterMark to 50%, and High WaterMark to 70%)

naviseccli -h 10.124.23.128 setcache -wc 1 -rca 1 -rcb 1 (to enable Write and Read Cache)

Storage System Properties- Memory Tab

naviseccli -h 10.124.23.128 setcache -wsz 2500 -rsza 100 -rszb 100

naviseccli -h 10.124.23.128 setcache -wsz 3072 -rsza 3656 -rszb 3656 (maximum amount of cache for CX3-80)

Creating a RAID Group

naviseccli -h 10.124.23.128 createrg 0 1_0_0 1_0_1 1_0_2 1_0_3 1_0_4 -rm no -pri med (same Enclosure)

-rm (remove/destroy the Raid Group after the last LUN in the Raid Group is unbound) -pri (priority/rate of expansion/defragmentation of the Raid Group)

naviseccli -h 10.124.23.128 createrg 1 2_0_0 3_0_0 2_0_1 3_0_1 2_0_2 3_0_2 -raidtype r1_0 (for RAID 1_0 across enclosures)

RAID Group Properties - General

naviseccli -h 10.124.23.128 getrg 0

RAID Group Properties - Disks

naviseccli -h 10.124.23.128 getrg 0 -disks

Binding a LUN

naviseccli -h 10.124.23.128 bind r5 0 -rg 0 -rc 1 -wc 1 -sp a -sq gb -cap 10

bind raid type (r0, r1, r1_0, r3, r5, r6) -rg (raid group) -rc / -wc (read and write cache) -sp (storage processor) -sq (size qualifier - mb, gb, tb, bc (block count)) -cap (size of the LUN)
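If you bind many LUNs, it can help to assemble the command from its parts. The following is only a sketch: `bind_command` is a hypothetical helper, it uses nothing beyond the options listed above, and it builds the argument list without running anything.

```python
RAID_TYPES = {"r0", "r1", "r1_0", "r3", "r5", "r6"}
SIZE_QUALIFIERS = {"mb", "gb", "tb", "bc"}


def bind_command(host, raid_type, lun, rg, cap, sq="gb", sp="a", rc=1, wc=1):
    """Return the bind command as a list of arguments (hypothetical helper).

    Only the options described in the post are used; nothing is executed.
    """
    if raid_type not in RAID_TYPES:
        raise ValueError(f"unknown raid type: {raid_type}")
    if sq not in SIZE_QUALIFIERS:
        raise ValueError(f"unknown size qualifier: {sq}")
    return [
        "naviseccli", "-h", host, "bind", raid_type, str(lun),
        "-rg", str(rg), "-rc", str(rc), "-wc", str(wc),
        "-sp", sp, "-sq", sq, "-cap", str(cap),
    ]


# Rebuilds the 10 GB RAID 5 example from this post.
print(" ".join(bind_command("10.124.23.128", "r5", lun=0, rg=0, cap=10)))
```

Keeping the arguments as a list rather than one string makes them easy to check before they are handed to whatever actually runs the command.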

LUN Properties

naviseccli -h 10.124.23.128 getlun 0

naviseccli -h 10.124.23.128 chglun -l 0 -name Exchange_Log_Lun_0

RAID Group Properties - Partitions

naviseccli -h 10.124.23.128 getrg 0 -lunlist

Destroying a RAID Group

naviseccli -h 10.124.23.128 removerg 0

Creating a Storage Group

navicli -h 10.127.24.128 storagegroup -create -gname ProductionHost

Storage Group Properties - LUNs with Host ID

navicli -h 10.127.24.128 storagegroup -addhlu -gname ProductionHost -alu 6 -hlu 6

navicli -h 10.127.24.128 storagegroup -addhlu -gname ProductionHost -alu 23 -hlu 23

Storage Group Properties - Hosts

navicli -h 10.127.24.128 storagegroup -connecthost -host ProductionHost -gname ProductionHost

Destroying Storage Groups

navicli -h 10.127.24.128 storagegroup -destroy -gname ProductionHost

Thursday, October 9, 2008

Verifying RAID Group Disk Order

The examples in the illustration are output from running the getrg (get RAID Group) command in the Navisphere Command Line Interface.

Both RAID Groups are configured as Raid type 1_0.

In an earlier blog we discussed the importance of configuring RAID 1_0 by separating the Data disks and Mirrored Disks across multiple buses and enclosures on the back of the Clariion. The diagram shows how you can verify whether a RAID 1_0 Group is configured correctly or incorrectly.

The reason we are showing the output of the RAID Groups from the command line is that this is the only place to truly see if the RAID Groups were configured properly.

The GUI will show the disks as the Clariion sees them in the order of the Bus and Enclosure, not the order you have placed the disks in the RAID Group.
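Once you have the disk list in the order the command line reports it, the check can be scripted. This Python sketch rests on one assumption that you should confirm for your array: that consecutive disks in the list (first and second, third and fourth, and so on) form the data/mirror pairs. The function names are made up for illustration.

```python
def bus_of(disk_id):
    """Return the bus number from a Bus_Enclosure_Disk string."""
    return int(disk_id.split("_")[0])


def pairs_sharing_a_bus(disks):
    """List the (data, mirror) pairs whose two disks sit on the same bus.

    Assumption: disks are given in RAID Group order and consecutive
    disks are paired. An empty result means every pair spans two buses.
    """
    if len(disks) % 2:
        raise ValueError("RAID 1_0 needs an even number of disks")
    pairs = zip(disks[0::2], disks[1::2])
    return [(data, mirror) for data, mirror in pairs
            if bus_of(data) == bus_of(mirror)]


# Disk order taken from the createrg example for RAID 1_0 across enclosures.
good = "2_0_0 3_0_0 2_0_1 3_0_1 2_0_2 3_0_2".split()
# The same six disks, listed bus by bus instead.
bad = "2_0_0 2_0_1 2_0_2 3_0_0 3_0_1 3_0_2".split()

print(pairs_sharing_a_bus(good))
print(pairs_sharing_a_bus(bad))
```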

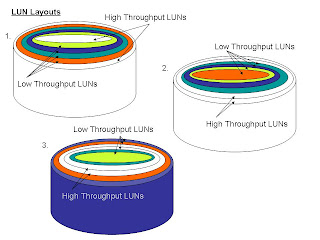

LUN Layouts

LUN Layout

This diagram shows three different ways in which the same 6 LUNs could be laid out on a RAID Group.

In Example 1, the two heavily utilized LUNs have been placed at the beginning and end of the RAID Group, meaning they were the first and last LUNs created on the RAID Group, with lightly utilized LUNs between them. The disadvantage for the LUNs, the RAID Group, and the disks is that Example 1 would see much longer Seek Distances at the Disk Level, because the head has to travel, on average, a greater distance across the disks between the two busiest LUNs. Longer Seek Distances bring greater latency and longer response times for the data.

Example 2 has the two heavily utilized LUNs adjacent to each other at the beginning of the RAID Group. While this is the best case scenario for the two busiest LUNs, it could also result in high Seek Distances at the Disk Level, because the head would be traveling between the busiest LUNs and then seeking a great distance across the disk whenever access is needed to the less utilized LUNs.

Example 3 shows the heavily utilized LUNs placed in the center of the RAID Group. The advantage of this configuration is that the head of the disk would remain between the two busiest LUNs, and would then have a much shorter seek distance to the less utilized LUNs on the outer and inner edges of the disks.
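To get a feel for the three examples, here is a toy model in Python. Everything in it is an assumption made for illustration: the six LUNs are the same size and sit at positions 0 to 5, each busy LUN gets 40% of the accesses and each light LUN 5%, and every access is independent of the one before it. It measures distance in "LUN widths", not in anything a real disk reports.

```python
def expected_seek(weights):
    """Average distance between two independent accesses.

    weights[i] is the relative access rate of the LUN at position i.
    """
    total = sum(weights)
    probs = [w / total for w in weights]
    return sum(p_from * p_to * abs(i - j)
               for i, p_from in enumerate(probs)
               for j, p_to in enumerate(probs))


BUSY, LIGHT = 0.40, 0.05  # made-up access shares

layouts = {
    "Example 1 (busy LUNs first and last)": [BUSY, LIGHT, LIGHT, LIGHT, LIGHT, BUSY],
    "Example 2 (busy LUNs at the start)": [BUSY, BUSY, LIGHT, LIGHT, LIGHT, LIGHT],
    "Example 3 (busy LUNs in the middle)": [LIGHT, LIGHT, BUSY, BUSY, LIGHT, LIGHT],
}

for name, weights in layouts.items():
    print(f"{name}: {expected_seek(weights):.2f} LUN widths")
```

With these made-up shares, Example 1 comes out with the longest average seek and Example 3 with the shortest, which matches the reasoning above. Different access shares will change the numbers.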

The problem with these types of configurations is that, for the most part, it is too late to lay out the LUNs in such a way. However, with the use of LUN Migrations in Navisphere, and enough unallocated Disk Space, this could be accomplished while keeping the LUNs online to the hosts. You will, however, see an impact on the performance of these LUNs during the Migration process.

But if performance is an objective, it could be worth it in the long run to make the changes. When LUNs and RAID Groups are initially configured, we usually don’t know what type of Throughput to expect. After monitoring with Navisphere Analyzer, we could, at a later time, begin to move the LUNs with heavier needs off of the same Raid Groups and onto Raid Groups whose LUNs are not so heavily accessed.

Tuesday, March 18, 2008

Stripe Size of a LUN

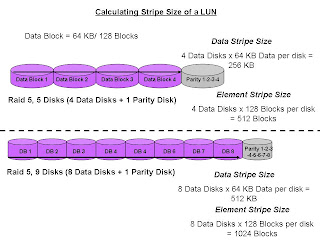

Calculating the Stripe Size of a LUN

To calculate the size of a stripe of data that the Clariion writes to a LUN, we must know how many disks make up the Raid Group, as well as the Raid Type, and how big a chunk of data is written out to a disk. In the illustration above, we have two examples of Stripe Size of a LUN.

The top example shows a Raid 5, five disk Raid Group. We usually hear this referred to as 4 + 1. That means that of the five disks that make up the Raid Group, four of the disks are used to store the data, and the remaining disk is used to store the parity information for the stripe of data in the event of a disk failure and rebuild. Let’s base this on the Clariion’s disk format, which uses an Element Size of 128 blocks (the number of blocks written to a disk before writing/striping to the next disk in the Raid Group). That is equal to the 64 KB Chunk Size of data that is written to a disk before writing/striping to the next disk in the Raid Group. (See blog titled DISK FORMAT.)

To determine the Data Stripe Size, we simply multiply the number of data disks in the Raid Group (4) by the amount of data written per disk (64 KB), which gives 256 KB of data per stripe in a Raid 5, five disk Raid Group (4 + 1). To get the Element Stripe Size, we multiply the number of data disks in the Raid Group (4) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 512 blocks.

The bottom example illustrates another Raid 5 group, however the number of disks in the Raid Group is nine (9). This is often referred to as 8 + 1. Again, eight (8) disks for data, and the remaining disk is used to store the parity information for the stripe of data.

To determine the Data Stripe Size, we simply multiply the number of data disks in the Raid Group (8) by the amount of data written per disk (64 KB), which gives 512 KB of data per stripe in a Raid 5, nine disk Raid Group (8 + 1). To get the Element Stripe Size, we multiply the number of data disks in the Raid Group (8) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 1024 blocks.

The confusion usually comes across in the terminology. The Stripe Size again is the amount of data written to a stripe of the Raid Group, and the Element Stripe Size is the number of blocks written to a stripe of a Raid Group.
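The two calculations can be written as a short Python sketch. It assumes 512-byte blocks, which is what makes 128 blocks equal 64 KB; the function name `stripe_sizes` is made up for illustration.

```python
BLOCK_BYTES = 512      # assumed block size (128 blocks = 64 KB)
ELEMENT_BLOCKS = 128   # blocks written to one disk before moving to the next


def stripe_sizes(data_disks):
    """Return (Data Stripe Size in KB, Element Stripe Size in blocks)."""
    element_stripe_blocks = data_disks * ELEMENT_BLOCKS
    data_stripe_kb = element_stripe_blocks * BLOCK_BYTES // 1024
    return data_stripe_kb, element_stripe_blocks


# Raid 5 (4 + 1) and Raid 5 (8 + 1): only the data disks count.
for label, data_disks in (("4 + 1", 4), ("8 + 1", 8)):
    kb, blocks = stripe_sizes(data_disks)
    print(f"Raid 5 ({label}): {kb} KB per stripe, {blocks} blocks per stripe")
```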

Monday, March 17, 2008

Setting the Alignment Offset on ESX Server and a (Virtual) Windows Server

To add to the layer of confusion, we must discuss what needs to be done when assigning a LUN to an ESX Server, and then creating the (virtual) disk that will be assigned to the (Virtual) Windows Server.

As stated in the previous blog titled Disk Alignment, we must align the data on the disks before any data is written to the LUN itself. We align the LUN on the ESX Server because of the way in which a Clariion Formats the Disks in the 128 blocks per disk (64 KB Chunk) and the metadata written to the LUN from the ESX Server. Although, it is my understanding that ESX Server v.3.5 takes care of the initial offset setting of 128.

The following are the steps to align a LUN for Linux/ESX Server:

Execute the following steps to align VMFS

1. On service console, execute “fdisk /dev/sd

2. Type “n” to create a new partition

3. Type “p” to create a primary partition

4. Type “1” to create partition #1

5. Select the defaults to use the complete disk

6. Type “x” to get into expert mode

7. Type “b” to specify the starting block for partitions

8. Type “1” to select partition #1

9. Type “128” to make partition #1 to align on 64KB boundary

10. Type “r” to return to main menu

11. Type “t” to change partition type

12. Type “1” to select partition 1

13. Type “fb” to set type to fb (VMFS volume)

14. Type “w” to write label and the partition information to disk

Now that the ESX Server has aligned its disk, when the cache on the Clariion starts writing data to the disk, it will start writing data to the first block on the second disk, or block number 128. And, because the Clariion formats the disks in 64 KB Chunks, it will write one Chunk of data to a disk.

If we create a (Virtual) Windows Server on the ESX Server, we must take into account that when Windows is assigned a LUN, it will also want to write a signature to the disk. We know that it is a Virtual Machine, but Windows doesn’t know that. It believes it is a real server. So, when Windows grabs the LUN, it will write its signature to the disk. See blog titled DISK ALIGNMENT. Again, the problem is that the Windows Signature will take up 63 blocks. Starting at the first block (Block # 128) on the second disk in the RAID Group, the Signature will write halfway across the second disk in the Raid Group. When Cache begins to write the data out to disk, it will write to the next available block, which is the 64th block on the second disk. In the top illustration, we can see that a 64 KB Data Chunk that is written out to disk as one operation will now span two disks, a Disk Cross. And from here on out for that LUN, we will see a Disk Cross because there was no offset set on the (Virtual) Windows Server.

In the bottom example, the offset was set on the ESX Server and also on the (Virtual) Windows Server, so Cache now writes out to a single disk in 64 KB Data Chunks, therefore limiting the number of Disk Crosses.
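The Disk Cross can be checked with a little arithmetic. This Python sketch uses a simplified model, with block numbers counted from the start of the LUN and a new disk starting every 128 blocks; the function name `crosses_disk` is made up for illustration.

```python
ELEMENT_BLOCKS = 128  # 64 KB Chunk = 128 blocks written per disk


def crosses_disk(start_block, length_blocks=ELEMENT_BLOCKS):
    """True if a write of length_blocks starting at start_block spans two disks."""
    offset_in_element = start_block % ELEMENT_BLOCKS
    return offset_in_element + length_blocks > ELEMENT_BLOCKS


ESX_OFFSET = 128        # offset set on the ESX Server
WINDOWS_SIGNATURE = 63  # blocks taken up by the Windows signature
WINDOWS_OFFSET = 128    # offset set on the (Virtual) Windows Server

# Top illustration: no offset on Windows, data starts right after the signature.
print(crosses_disk(ESX_OFFSET + WINDOWS_SIGNATURE))

# Bottom illustration: offset set on both, data starts on a 64 KB boundary.
print(crosses_disk(ESX_OFFSET + WINDOWS_OFFSET))
```

In the first case the data starts at block 191, which is 63 blocks into the second disk, so a 64 KB write spills onto the third disk. In the second case it starts at block 256, the first block on the third disk.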

Again, from the (Virtual) Windows Server we can set the offset for the LUNs using either Diskpart or Diskpar.

To set the alignment using Diskpart, see the earlier Blog titled Setting the Alignment Offset for 2003 Windows Servers(sp1).

To set the alignment using Diskpar:

C:\ diskpar -s 1

Set partition can only be done on a raw drive.

You can use Disk Manager to delete all existing partitions

Are you sure drive 1 is a raw device without any partition? (Y/N) y

----Drive 1 Geometry Information ----

Cylinders = 1174

TracksPerCylinder = 255

SectorsPerTrack = 63

BytesPerSector = 512

DiskSize = 9656478720 (Bytes) = 9209 (MB)

We are going to set the new disk partition.

All data on this drive will be lost. Continue (Y/N) ? Y

Please specify the starting offset (in sectors) : 128

Please specify the partition length (in MB) (Max = 9209) : 5120

Done setting partition

---- New Partition information ----

StatringOffset = 65536

PartitionLength = 5368709120

HiddenSectors = 128

PartitionNumber = 1

PartitionType = 7

As the bottom illustration above shows, the ESX server has set an offset, and the (Virtual) Windows Machine has written its signature and has set the offset to start writing data to the first block on the third disk in the Raid Group.
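The numbers in the Diskpar output can be cross-checked with plain arithmetic. This Python sketch uses only the values printed above; the variable names are made up for illustration.

```python
BYTES_PER_SECTOR = 512
MB = 1024 * 1024

# Geometry reported by Diskpar for drive 1.
cylinders, tracks_per_cylinder, sectors_per_track = 1174, 255, 63

disk_size_bytes = (cylinders * tracks_per_cylinder
                   * sectors_per_track * BYTES_PER_SECTOR)
disk_size_mb = disk_size_bytes // MB

# Values entered at the prompts.
starting_offset_bytes = 128 * BYTES_PER_SECTOR  # 128 sectors
partition_length_bytes = 5120 * MB              # 5120 MB

print("DiskSize        =", disk_size_bytes, "bytes =", disk_size_mb, "MB")
print("Starting offset =", starting_offset_bytes, "bytes")
print("PartitionLength =", partition_length_bytes, "bytes")
print("64 KB aligned   =", starting_offset_bytes % (64 * 1024) == 0)
```

A starting offset of 128 sectors works out to 65536 bytes, which is exactly one 64 KB Chunk, and that is why the partition begins on a Chunk boundary.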

Thursday, February 14, 2008
