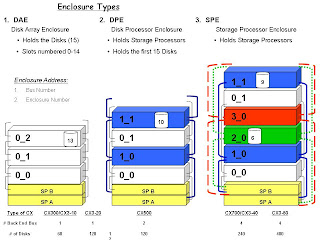

Enclosure Types

The page above diagrams the back-end structure of a Clariion, that is, how the disks are laid out. Before we discuss the back-end bus structure, we should discuss the different types of enclosures that a Clariion contains.

1. DAE. The Disk Array Enclosure. Disk Array Enclosures exist in all Clariions. DAEs are the enclosures that house the disks in the Clariion. Each DAE holds fifteen (15) disks, in slots numbered 0 to 14.

2. DPE. The Disk Processor Enclosure. The DPE is found in the Clariion models CX300, CX400, and CX500. It combines two components in one enclosure: the Storage Processors and the first fifteen (15) disks of the Clariion.

3. SPE. The Storage Processor Enclosure. The SPE is found in the Clariion model CX700 and the CX-3 Series. It is the enclosure that houses the Storage Processors.

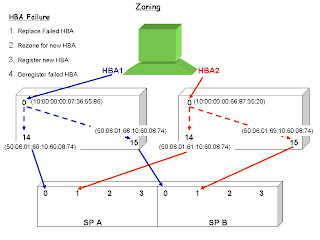

The diagrams above lay out the back-end bus structure of the DAEs. Data that leaves Cache and is written to disk, or data that is read from disk and placed into Cache, travels along these back-end buses or loops. Some Clariions have one back-end bus/loop to get data from enclosure to enclosure. Others have two or four back-end buses/loops to push and pull data from the disks. The more buses/loops, the higher the expected throughput on the back-end of the Clariion.

The diagram on the left represents the CX300, CX3-10, and CX3-20. These models have a single back-end bus/loop to connect all of the enclosures. The CX300 has one back-end bus/loop running at a speed of 2 Gb/sec (gigabits per second), while the CX3-Series Clariions can run at up to 4 Gb/sec on the back-end.

The diagram in the middle represents the CX500. The CX500 has two back-end buses/loops, which gives it twice the potential I/O throughput of the CX300.

The diagram on the right represents the CX700, CX3-40, and CX3-80. These Clariions have four back-end buses/loops. The CX3-80 offers the maximum back-end throughput, with all four buses able to run at 4 Gb/sec (gigabits per second).

Each enclosure has a redundant connection to the bus it is on. This protects against the loss of a Link Control Card (LCC), the component that allows the enclosures to move data, or the loss of a Storage Processor. You will see each bus cabled out of both SP A and SP B, giving both SPs access to each enclosure.

Enclosure Addresses

To determine the address of an enclosure, we need to know two things: which bus it is on, and which number enclosure it is on that bus. On the Clariions in the left diagram, there is only one back-end bus/loop, so every enclosure on these Clariions is on Bus 0. The enclosure numbers start at zero (0) for the first enclosure and work their way up. On these Clariions, the first enclosure of disks is labeled Bus 0_Enclosure 0 (0_0). The next enclosure of disks is Bus 0_Enclosure 1 (0_1), the one after that is 0_2, and so on.

The CX500, with two back-end buses, alternates enclosures between the buses. The first enclosure of disks is the same as on the Clariions on the left: Bus 0_Enclosure 0 (0_0). The next enclosure of disks uses the other back-end bus/loop, Bus 1. This enclosure is Bus 1_Enclosure 0 (1_0). It is Enclosure 0 because it is the first enclosure of disks on Bus 1. The third enclosure of disks is back on Bus 0, as 0_1. The next one up is on Bus 1, as 1_1. The enclosures continue to alternate until the Clariion has all of its supported enclosures. You might ask why it is cabled this way, alternating buses. The reason is that most companies don’t purchase Clariions fully populated; they buy disks on an as-needed basis. By alternating enclosures, you use all of the back-end resources available on that Clariion, even when it is only partly populated.
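The alternating pattern can be written as a simple round-robin rule. The sketch below is only an illustration: the function name `enclosure_address` is made up for this example, and extending the same round-robin order to the four-bus models is an assumption, since only the two-bus CX500 sequence is spelled out here.

```python
def enclosure_address(index: int, num_buses: int) -> str:
    """Return the Bus_Enclosure label for the index-th enclosure added (0-based).

    Assumes enclosures are assigned to buses in strict round-robin order,
    as described for the two-bus CX500. Hypothetical helper, not an EMC tool.
    """
    if index < 0:
        raise ValueError("index must be 0 or greater")
    if num_buses < 1:
        raise ValueError("num_buses must be 1 or greater")
    bus = index % num_buses         # which back-end bus/loop the enclosure lands on
    enclosure = index // num_buses  # its position on that bus
    return f"{bus}_{enclosure}"


# CX500 (two buses): the first four enclosures alternate between Bus 0 and Bus 1.
cx500 = [enclosure_address(i, 2) for i in range(4)]
print(cx500)  # ['0_0', '1_0', '0_1', '1_1']

# Single-bus models: everything stays on Bus 0.
cx300 = [enclosure_address(i, 1) for i in range(3)]
print(cx300)  # ['0_0', '0_1', '0_2']
```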

The last topic for this page is the disks themselves. To find a specific disk’s address, we take the Enclosure Address and add the number of the slot the disk is in. This gives us the address known as the B_E_D: Bus_Enclosure_Disk. The Clariion on the left has a disk in slot 13 of Enclosure 0_2, so the address of that disk is 0_2_13. The Clariion in the middle has a disk in slot 10 of Enclosure 1_1, so its address is 1_1_10. The Clariion on the right has a disk in slot 6 of Bus 2_Enclosure 0, so its address is 2_0_6, and another disk in slot 9 of Bus 1_Enclosure 1, so its address is 1_1_9.
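As a rough illustration of the B_E_D scheme, the sketch below builds and splits these addresses in Python. The helper names `disk_address` and `parse_disk_address` are hypothetical and are not part of any Clariion tool; the only facts used are the Bus_Enclosure_Disk order and the 0 to 14 slot range.

```python
SLOTS_PER_ENCLOSURE = 15  # slots are numbered 0 to 14


def disk_address(bus: int, enclosure: int, slot: int) -> str:
    """Build a Bus_Enclosure_Disk (B_E_D) address string."""
    if bus < 0 or enclosure < 0:
        raise ValueError("bus and enclosure must be 0 or greater")
    if not 0 <= slot < SLOTS_PER_ENCLOSURE:
        raise ValueError("slot must be between 0 and 14")
    return f"{bus}_{enclosure}_{slot}"


def parse_disk_address(address: str) -> tuple[int, int, int]:
    """Split a B_E_D string such as '1_1_10' into (bus, enclosure, slot)."""
    parts = address.split("_")
    if len(parts) != 3:
        raise ValueError("expected an address of the form Bus_Enclosure_Disk")
    bus, enclosure, slot = (int(p) for p in parts)
    disk_address(bus, enclosure, slot)  # reuse the range checks above
    return bus, enclosure, slot


print(disk_address(0, 2, 13))        # 0_2_13
print(parse_disk_address("1_1_10"))  # (1, 1, 10)
```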

Finally, each Clariion has a limit to the number of disks that it will support. The chart below the diagrams lists how many disks each model can contain. The CX300 can have a maximum of 60 disks, whereas the CX3-80 can have up to 480 disks.
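Because every enclosure of disks holds fifteen disks, the disk limits translate directly into enclosure counts. The short sketch below does that arithmetic for the two models quoted here; it assumes each enclosure is counted at its full fifteen slots, and the dictionary and function names are made up for the example.

```python
DISKS_PER_ENCLOSURE = 15

# Only the two limits quoted in the text; other models are left out on purpose.
MAX_DISKS = {"CX300": 60, "CX3-80": 480}


def max_disk_enclosures(model: str) -> int:
    """Number of fully populated 15-disk enclosures implied by the disk limit."""
    return MAX_DISKS[model] // DISKS_PER_ENCLOSURE


for model in MAX_DISKS:
    print(model, max_disk_enclosures(model))
# CX300 4
# CX3-80 32
```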

The importance of this page is knowing where the disks live in the back of the Clariion in the event of disk failures and, more importantly, how you are going to lay out the disks, meaning which applications are going to be on which disks. In order to put that data onto disks, we have to create LUNs (we will get to them), which are carved out of RAID Groups (again, getting there shortly). A RAID Group is a grouping of disks. To get a nice balance and achieve as much performance and throughput as possible on the Clariion, we have to know how the Clariion labels the disks and how the DAEs are structured.