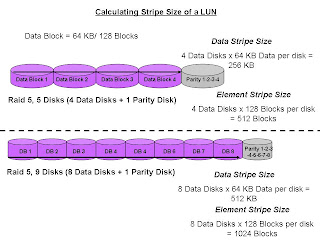

Calculating the Stripe Size of a LUN

To calculate the size of a stripe of data that the Clariion writes to a LUN, we must know three things: how many disks make up the Raid Group, the Raid Type, and how big a chunk of data is written to each disk. The illustration above shows two examples of the Stripe Size of a LUN.

The top example shows a Raid 5, five disk Raid Group. We usually hear this referred to as 4 + 1. That means that of the five disks that make up the Raid Group, four are used to store the data, and the remaining disk is used to store the parity information for the stripe of data, which is needed in the event of a disk failure and rebuild. Let’s base this on the Clariion disk format, in which the Element Size (the number of blocks written to a disk before writing/striping to the next disk in the Raid Group) is 128 blocks. That is equal to the 64 KB Chunk Size of data written to a disk before writing/striping to the next disk in the Raid Group. (see blog titled DISK FORMAT)
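As a quick check of how the Element Size and the Chunk Size relate, here is a minimal sketch. The 512-byte block size is an assumption inferred from the figures above (64 KB divided by 128 blocks), and the constant names are made up for illustration.

```python
# Assumption: one block holds 512 bytes of data, inferred from 128 blocks == 64 KB.
BLOCK_SIZE_BYTES = 512
# Element Size from the text: blocks written to a disk before moving to the next disk.
ELEMENT_SIZE_BLOCKS = 128

# Convert the Element Size (blocks) into the Chunk Size (KB).
chunk_size_kb = ELEMENT_SIZE_BLOCKS * BLOCK_SIZE_BYTES // 1024

print(chunk_size_kb)  # 64
```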

To determine the Data Stripe Size, we simply multiply the number of data disks in the Raid Group (4) by the amount of data written per disk (64 KB). For a Raid 5, five disk Raid Group (4 + 1), that gives 256 KB of data. To get the Element Stripe Size, we multiply the number of data disks in the Raid Group (4) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 512 blocks.

The bottom example illustrates another Raid 5 group, but this time the number of disks in the Raid Group is nine (9). This is often referred to as 8 + 1. Again, eight (8) disks are used for data, and the remaining disk is used to store the parity information for the stripe of data.

To determine the Data Stripe Size, we simply multiply the number of data disks in the Raid Group (8) by the amount of data written per disk (64 KB). For a Raid 5, nine disk Raid Group (8 + 1), that gives 512 KB of data. To get the Element Stripe Size, we multiply the number of data disks in the Raid Group (8) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 1024 blocks.
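The two calculations above can be sketched as one small function. This is only an illustration: the function name and parameters are hypothetical, it assumes a Raid 5 layout with one disk's worth of parity per stripe, and it assumes 512-byte blocks (implied by 128 blocks equaling 64 KB).

```python
def stripe_sizes(total_disks, parity_disks=1,
                 element_size_blocks=128, block_size_bytes=512):
    """Return (Data Stripe Size in KB, Element Stripe Size in blocks).

    Hypothetical helper: defaults assume Raid 5 (one parity disk per stripe),
    a 128-block Element Size, and 512-byte blocks.
    """
    data_disks = total_disks - parity_disks
    # Element Stripe Size: blocks written across the data disks of one stripe.
    element_stripe_blocks = data_disks * element_size_blocks
    # Data Stripe Size: the same stripe expressed in KB.
    data_stripe_kb = element_stripe_blocks * block_size_bytes // 1024
    return data_stripe_kb, element_stripe_blocks


for total in (5, 9):
    kb, blocks = stripe_sizes(total)
    print(f"{total - 1} + 1: {kb} KB, {blocks} blocks")
# 4 + 1: 256 KB, 512 blocks
# 8 + 1: 512 KB, 1024 blocks
```

Only the data disks count toward either figure; the parity disk is subtracted before multiplying.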

The confusion usually comes from the terminology. The Stripe Size, again, is the amount of data (in KB) written to a stripe of the Raid Group, and the Element Stripe Size is the number of blocks written to a stripe of the Raid Group. Both describe the same stripe, just in different units.