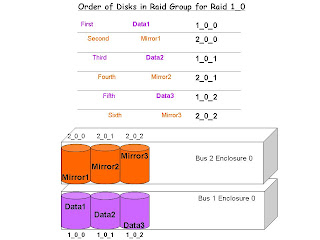

Order of Disks in RAID Group for RAID 1_0.

When creating a RAID 1_0 RAID Group, it is important to understand that the order in which the drives are put into the RAID Group will absolutely make a difference in the Performance and Protection of that RAID Group. If left to the Clariion, Navisphere will simply take the next available disks in the order in which the Clariion sees them, and these are usually right next to one another in the same enclosure. However, this may not be the best way to configure a RAID 1_0 RAID Group.

In a RAID 1_0 Group, we want the RAID Group to span multiple enclosures as illustrated above. As we can see, the Data Disks will be on Bus 1_Enclosure 0, and the Mirrored Data Disks will be on Bus 2_Enclosure 0. The first advantage of creating the RAID Group this way is Protection: the Data and the Mirrors sit on two separate enclosures, so in the event of an enclosure failure, the other enclosure could still be alive and maintaining access to the data or the mirrored data. The second advantage is Performance. Performance could be gained through this configuration because you are spreading the workload of the application across two different buses on the back of the Clariion.

Notice the order in which the disks were placed into the RAID 1_0 Group. For the disks to go into the RAID Group in this order, they must be entered manually, via Navisphere or the Command Line.

The first disk into the RAID Group receives Data Block 1.

The second disk into the RAID Group receives the Mirror of Data Block 1.

The third disk into the RAID Group receives Data Block 2.

The fourth disk into the RAID Group receives the Mirror of Data Block 2.

The fifth disk into the RAID Group receives Data Block 3.

The sixth disk into the RAID Group receives the Mirror of Data Block 3.

If we let the Clariion choose these disks in its own order, it would select them as follows:

First disk – 1_0_0 (Data Block 1)

Second disk – 1_0_1 (Mirror of Data Block 1)

Third disk – 1_0_2 (Data Block 2)

Fourth disk – 2_0_0 (Mirror of Data Block 2)

Fifth disk – 2_0_1 (Data Block 3)

Sixth disk – 2_0_2 (Mirror of Data Block 3)

This defeats the purpose of having the Mirrored Data on a different enclosure than the Data Disks.
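The difference between the two orders can be sketched in a few lines of Python. This is only an illustration of the pairing rule described above (disks 1 and 2 form a data/mirror pair, disks 3 and 4 the next pair, and so on); the function names `interleave`, `mirror_pairs` and `enclosure_of` are made up for this example and are not part of Navisphere or any EMC tool.

```python
def interleave(primary_disks, mirror_disks):
    """Alternate data and mirror disks: p0, m0, p1, m1, ..."""
    if len(primary_disks) != len(mirror_disks):
        raise ValueError("need the same number of data and mirror disks")
    order = []
    for primary, mirror in zip(primary_disks, mirror_disks):
        order.extend([primary, mirror])
    return order


def mirror_pairs(order):
    """Group a RAID 1_0 disk order into (data, mirror) pairs by position."""
    return [(order[i], order[i + 1]) for i in range(0, len(order) - 1, 2)]


def enclosure_of(disk_id):
    """Return the 'bus_enclosure' part of a bus_enclosure_disk ID."""
    bus, enclosure, _disk = disk_id.split("_")
    return f"{bus}_{enclosure}"


bus1 = ["1_0_0", "1_0_1", "1_0_2"]
bus2 = ["2_0_0", "2_0_1", "2_0_2"]

default_order = bus1 + bus2            # what the Clariion would pick
manual_order = interleave(bus1, bus2)  # what we enter by hand

for name, order in [("default", default_order), ("manual", manual_order)]:
    for data, mirror in mirror_pairs(order):
        split = enclosure_of(data) != enclosure_of(mirror)
        status = "separate enclosures" if split else "SAME enclosure"
        print(name, data, mirror, status)
```

With the default order, two of the three pairs end up inside a single enclosure; with the manual order, every pair straddles Bus 1 and Bus 2.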

11 comments:

When I created the rg in the described manner the disks show up correctly in the GUI, but when I run getrg from the CLI they show up this way:

List of disks: Bus 1 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 2

Bus 2 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 2

That being said, which is the best way to check that the rg is set up correctly for performance/redundancy?

ouch...what that shows from the command line is that it is not set up the correct way...you should see..

naviseccli -h ..... getrg

Bus 1 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 2

Bus 2 Enclosure 0 Disk 2

the only true way to verify a Raid Group order is via the command line. The GUI will show the disks in the order in which the Clariion sees them...not how they were put in the Raid Group.

If you want to do it from the command line, it would look something like this...

naviseccli -h ...... createrg (whatever number Raid Group) 1_0_0 2_0_0 1_0_1 2_0_1 1_0_2 2_0_2 -raidtype r1_0
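If you would rather check a getrg listing mechanically than by eye, something like the Python sketch below could help. It assumes the disk list has been saved as plain text with lines of the form "Bus 1 Enclosure 0 Disk 0", as pasted in this thread; the helper names `parse_disks` and `pairs_sharing_enclosure` are hypothetical. It only inspects the order as printed, and later comments in this thread report that the printed order can change after a LUN is bound on Flare 28, so treat the result as a hint rather than proof.

```python
import re

# Matches lines such as "Bus 1 Enclosure 0 Disk 0" anywhere in the text.
DISK_LINE = re.compile(r"Bus (\d+) Enclosure (\d+) Disk (\d+)")


def parse_disks(text):
    """Return (bus, enclosure, disk) tuples in the order they are listed."""
    return [tuple(int(n) for n in m.groups()) for m in DISK_LINE.finditer(text)]


def pairs_sharing_enclosure(disks):
    """Return the (data, mirror) pairs whose two disks share an enclosure."""
    bad = []
    for i in range(0, len(disks) - 1, 2):
        data, mirror = disks[i], disks[i + 1]
        if data[:2] == mirror[:2]:  # same bus and same enclosure
            bad.append((data, mirror))
    return bad


listing_bad = """List of disks: Bus 1 Enclosure 0 Disk 0
Bus 1 Enclosure 0 Disk 1
Bus 1 Enclosure 0 Disk 2
Bus 2 Enclosure 0 Disk 0
Bus 2 Enclosure 0 Disk 1
Bus 2 Enclosure 0 Disk 2"""

listing_good = """List of disks: Bus 1 Enclosure 0 Disk 0
Bus 2 Enclosure 0 Disk 0
Bus 1 Enclosure 0 Disk 1
Bus 2 Enclosure 0 Disk 1
Bus 1 Enclosure 0 Disk 2
Bus 2 Enclosure 0 Disk 2"""

for label, listing in [("bad", listing_bad), ("good", listing_good)]:
    problems = pairs_sharing_enclosure(parse_disks(listing))
    print(label, "pairs sharing an enclosure:", problems)
```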

So... I've created a rg using the command line as you suggest and when you look at it from the command line everything looks good up until I bind a LUN. Once I bind a LUN the order in which the disks show up from the command line changes. Any ideas?

Sorry.. it changes from this:

Bus 1 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 2

Bus 2 Enclosure 0 Disk 2

To this:

Bus 1 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 3

Bus 2 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 3

Yes, this is brand new to Clariion World starting with the CX4s and Flare 28. A ticket has been opened with EMC regarding this. I have been trying to get it to work and I keep getting all sorts of stuff happening...for instance...I created it like such:

Bus 1 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 2

Bus 2 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 2

the incorrect way...and here is the output when a LUN was bound

Bus 1 Enclosure 0 Disk 0

Bus 1 Enclosure 0 Disk 1

Bus 2 Enclosure 0 Disk 0

Bus 2 Enclosure 0 Disk 1

Bus 1 Enclosure 0 Disk 2

Bus 2 Enclosure 0 Disk 2

NO FREAKING CLUE...will keep you updated as I hear things

How would you configure the disk in a raid group if you have a four bus system?

Im really interested to hear an answer to Anonymous's last question.

in a four-bus system, i would still only use raid 1_0 across two buses...but make two separate raid groups...for instance, one raid group going between bus 1 and bus 2, and one raid group between bus 3 and bus 0...then creating luns on the two raid groups, and making a striped metalun between the raid groups...
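As a rough sketch of that layout, the Python below prints the disk order for two RAID 1_0 groups, one between bus 1 and bus 2 and one between bus 3 and bus 0. The function name `plan_raid_groups`, the choice of three disks per bus, and the use of Enclosure 1 are all assumptions made for the example (Enclosure 1 is used here only because a warning quoted further down this thread advises against splitting a RAID Group between Bus 0 Enclosure 0 and other enclosures). The LUNs and the striped metaLUN still have to be created separately.

```python
def plan_raid_groups(bus_pairs, disks_per_bus, enclosure):
    """Build one interleaved disk order per (data_bus, mirror_bus) pair."""
    plans = []
    for data_bus, mirror_bus in bus_pairs:
        order = []
        for slot in range(disks_per_bus):
            order.append(f"{data_bus}_{enclosure}_{slot}")    # data disk
            order.append(f"{mirror_bus}_{enclosure}_{slot}")  # its mirror
        plans.append(order)
    return plans


# Two RAID 1_0 groups: bus 1 <-> bus 2, and bus 3 <-> bus 0.
plans = plan_raid_groups([(1, 2), (3, 0)], disks_per_bus=3, enclosure=1)

for number, order in enumerate(plans):
    print("raid group", number, ":", " ".join(order))
```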

Great answer. Although EMC has said in the past that going across multiple buses like that wouldn’t really increase performance, but that there is no real negative in doing it. I think in Release 23 (which is old) it did state that doing it increases reliability to 99.999%.

I will give you my horrible experience:

Striping across 4 buses was absolutely the most redundant configuration that I could think of until I found out how the system handles an LCC failure. I have a CX8-80 with 32 DAEs and all disks full. I had a failure on the B side LCC on Bus 0 Enclosure 2, which in turn failed the path on Bus 0 Enclosures 2-7. The way an LCC failure is handled is that if the RAID group can survive the loss of its disks on the affected enclosures, then the system will fail the disks in lieu of doing a LUN trespass. In my case I only had 1 disk per RAID group on a DAE, so it wound up failing 90 disks at the same time. Had there been 2 disks per RAID group on that DAE, then the LUN would have trespassed.

The big problem came when the LCC was reseated and it came back online. At that point all 90 disks started their rebuild process, and all LUNs were set to ASAP for their rebuild priority. This halted performance and our applications were barely functional for about 2.5 days. An LCC is a more common failure than an entire bus, so we are now looking to re-layout our data to not do vertical striping of our RAID groups. This was a tough lesson learned, with a lot of back and forth with EMC and some of their engineering folks. Almost everyone we dealt with at EMC was learning this along with us. It seemed to be a very obscure scenario and there wasn’t much information regarding it. There is a Primus that outlines it, but it was not easy to find. Although I believe EMC should change their failure handling logic for this scenario, we are making adjustments to accommodate their newly enforced best practices.

I get this error when I try to create a raid group with disks 0_5 1_5 0_6 1_6:

The RAID group being selected (RAID 1/0, RAID 1) includes disks that are located both in Bus 0 Enclosure 0 as well as some other enclosure(s). This configuration could experience Rebuilds of some disk drives following a power failure event, because Bus 0 Enclosure 0 runs longer than other enclosures during a power failure event. An alternate configuration where the disks in the RAID group are not split between enclosures in this manner, is recommended.

Does anyone have an update regarding the order of the disks? I've got the same "problem" on 04.30.000.5.509.
